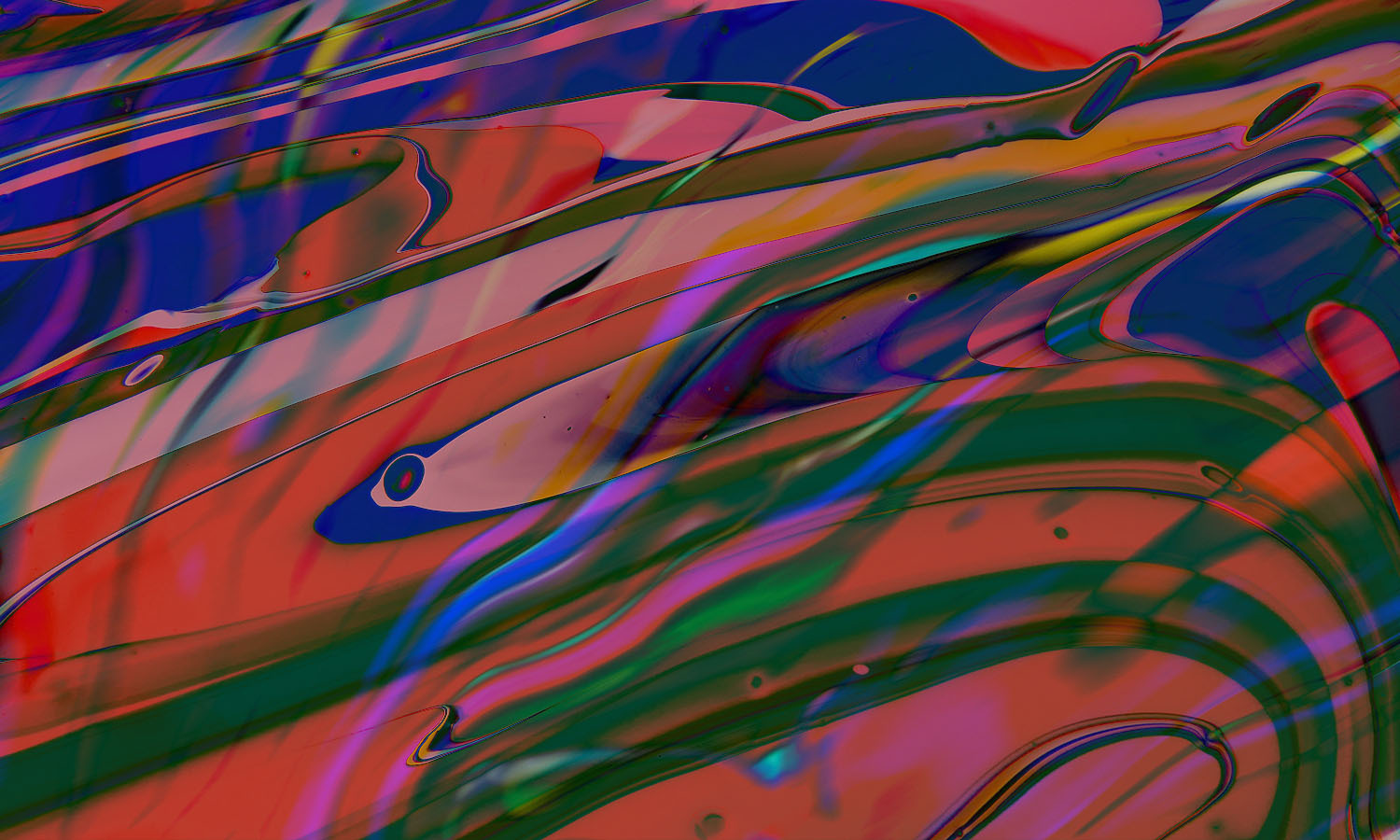

The nature of images, or if you may choose to call them so — artworks — generated during my brief foray into Midjourney can be described as synthetic dreams. There is a level of control beyond the experience of any lucid dream but the turning of the dream mind is just as unknowable. In process, I understand it as a powerful melding of vast data samples with attribute matching. In outcome, the way simple keywords transmute into strange yet familiar forms is overwhelming.

>Making the machine think is not the same way as making a human think. The prompts which lead to these images need to make everything explicit as there is no shared understanding. It has to be unlike dialogue, in terms of discreet and structured instructions which could then be matched and collated to synthesise the first drafts. Any number of drafts can be created. A better term to actually use here is 'inspire', as I felt quite unable to commission or request any specific image from the AI.

The journey from the first variants to the final picks.

Whereas I might have said 'a magnificent statue of Bastet in remarkable condition, maybe from 19th century, with gold details', to an illustrator whom I had barely known for ten minutes, here even hours after first contact I had to specify: 'bastet self-portrait, Egyptian god, Egyptian cat, sphinx, 50mm lens, photograph, photo real, hyper-realistic, onyx sculpture, lava and gold detail', and iterate over multiple rounds of variants until I struck lucky, though not quite in the way that I had imagined as it wasn't exactly any Bastet statue after all.

The quality of the images also varied wildly depending on the type of images and stylistic references I dispersed at the system. A simple illustration in a ligne claire style, followed by explicit mentions of Herge, Jean Giraud, atomic style and Moebius, all returned with incomprehension and aloofness, whereas I saw countless futuristic portraits in the style of genre concept artists I had never heard of being created with remarkable precision by other AI whisperers in the community Discord channel.

Missed attempts. Prompts: ‘closeup of a carnation flower, variegated texture, vibrant, translucent and opaque, made of mosaics and stones, surreal, hummingbird on a petal, sculpture, photograph of sculpture, photo real, hyper-real, verisimilitude, detailed texture’ and ‘drawing of two leopards discussing, herge, atomic style, flowers, silk hats’.

Midjourney wasn't the only AI I tried. There was a spell of DALL-E 2 experimentation and an ongoing attempt to locally install Stable Diffusion. These — and Google's Imagen — all use the machine learning model Diffusion to generate visuals from natural language input. The typed-in words are encoded in a process called transformation which takes not only the words but also the way they relate to each other, generating vectors which are then utilised to condition the image generation algorithm. It is this second aspect where the Diffusion Model comes in. It operates by taking the training data (vast image libraries that the model trains on) and continually adding noise to it until it completely becomes Gaussian noise, and then reversing the process to generate images. For any specific image generation prompt, it starts with a block of pure noise and denoises it under the conditions set by the text encoding to output semantically plausible images. The same principle is at work for generating variants and upscaling to the final image.

Attempts at creating some Columbia Road imagery; to the left, in the style of Henri Rousseau, and to the right, in the style of Sir Lawrence Alma-Tadema.

The appeal of current tools however is that one needs no understanding of what goes on behind the scenes and relies only on their imaginative sensibilities to create those stunning images we see on frenetic Twitter threads. There is a need to understand all the different parameters that can be fed to the system (I'm halfway through it myself) and also experiment with how these semantic relationships are defined textually. Each of the available AIs have their strengths and weaknesses too. Midjourney has given me the best outputs so far but DALL-E allows me to edit by erasing part of an image and typing in new prompts for those specific sections. It can also extend the Mona Lisa to a wide panoramic view. Imagen is rumoured to be the most photorealistic of them all while Midjourney the most artistic.

As of yet, I'm far too new to these to be dispensing advice on best practices but here are the strange visions it has provided me with so far, with the prompts that started the journey. Some of the best prompt-artists take days or months from the first prompt to arrive at their fruit so bear in mind these are just starting points. And to address that pressing question of how this might replace traditional illustrators — as a creative director of sorts I can certainly see that possibility, but at the same time it's impossibly laborious to produce anything exact or consistent, so as I described at the beginning, I mostly see them as dream material to start creating.

The most philosophical output so far. Prompt: ‘ape with the body of a dinosaur, gorilla face, t rex body, furry, claws, glaring, intense eyes, moody, scary, foggy, atmospheric, dusk, mountains in the background, monochrome, desaturated, 40mm lens, medium shot, crazy realistic, photograph, photo real, hyper realistic, vfx, in the style of king kong movie’

Magnificent though not what I had in mind. Prompt: ‘buddha self portrait, buddhism, gautama, lotus blossom on hand, graceful, calm, 50mm lens, photograph, photo real, hyper realistic, lapis lazuli sculpture, gold detail, detailed, intricate’

Surreal vistas. Prompts: ‘a boy holding a tree in the middle of a desert and crying, sunset, warm palette, wide vista, vultures flying in the sky, empty landscape, 35mm lens, photo real, hyper real, photograph, verisimilitude’ and ‘a forest half submerged in a lake, humans swimming, top of trees above water, birds on branches, night, wide angle, cinematic, starry sky, clouds, cool palette, life of pi, ilm, photo real, hyper real, ultra detailed’

Dapper beasts. Prompts: ‘kitty volante self portrait, glorious, optimistic, shiny, 85mm lens, photo real, ultra realistic, hyper realistic, vfx, weta, ilm, pixar’ and ‘portrait of a goat, cashmere goat with black hair, goat wearing tweed jacket + goat wearing optical glasses, highly detailed tweed jacket, looks like oxford professor, smiling radiantly, mountains in background, hd, 8k, photo real, hyper realistic, ultra detailed’.

Young and old.Prompts: ‘indian girl, photograph, monochrome’ and ‘98 year old finnish woman smiling, thick hair highly detailed, sharp highly detailed hazel eyes. Colourful nordic sweater. Lake in the background with a cottage. Photograph, photo real, hyperrealistic, ultra realism, hd, 8k, octane render, vray, Autodesk’.

The Data Handbook

How to use data to improve your customer journey and get better business outcomes in digital sales. Interviews, use cases, and deep-dives.

Get the book