The Data Handbook

How to use data to improve your customer journey and get better business outcomes in digital sales. Interviews, use cases, and deep-dives.

The good, the bad, the shiny: Let’s take the AI for a spin. Here’s how I created an improved user interface for ChatGPT using ChatGPT.

How to see beyond the AI hype?

Large Language Models (LLMs), especially ChatGPT, have received a lot of media attention. I use ChatGPT a lot to cross-check my ideas and bounce concepts around. It sure is impressive, even with all the flaws and hiccups.

My problem is that it’s all talk, and honestly, talk is cheap. Natural language is ambiguous: the meaning behind the words depends on context and hidden assumptions, so it is open to multiple interpretations. Code, on the other hand, is formal and follows strict rules. It either works or it does not. Sure, it can be more or less efficient, but in the end it comes down to a simple yes-or-no question: does it meet the functional requirements?

In technology, there is a difference between knowing and doing

Doing things hands-on gives you a completely different understanding of a topic. There’s a reason for the expression “fingertip feeling”: it describes excellent situational awareness and the ability to respond appropriately and tactfully. In other words, if you have never pulled your hair out while fighting with a compiler, your understanding of how software is made is theoretical at best.

I have done my share of school projects. Since graduating, I have not written any serious code beyond the occasional SQL query or an attempt to make a JavaScript presentation framework’s reference implementation go BRRRT. The fact is, I am out of touch, and my knowledge of the technical details is outdated at best.

After some reading, I learnt that the ChatGPT API provides access to configuration options that are not directly visible in the chat prompt. Sure, you can always ask it to behave in a certain way, but adjusting the configuration directly might be more convenient. I was also interested in preloading context programmatically and defining what the output should look like. Not surprisingly, I found a tool provided by OpenAI that demonstrates how changes in the configuration affect the output. It did not meet all of my needs, though, and I also wanted to push myself a bit and see if I could build a solution of my own. In layman’s terms, I wanted something that would generate presentation storylines and content following certain structures. If I could base it on templates and tweak it on the fly, I could use it for proposals and even project deliverables. In short: a new and improved interface for ChatGPT.
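To make the configuration options and context preloading more concrete, here is a minimal sketch (standard library only) of a request body for OpenAI’s Chat Completions API. The fields `model`, `messages`, `temperature` and `max_tokens` are parameters of that API; the system prompt, the storyline template with its `$topic` and `$audience` placeholders, and the helper `build_storyline_request` are hypothetical illustrations, not the code behind the tool described here.

```python
import json
from string import Template

# Hypothetical storyline template; the placeholders and structure are illustrative.
STORYLINE_TEMPLATE = Template(
    "Create a presentation storyline about $topic for $audience. "
    "Use this structure: situation, complication, resolution."
)


def build_storyline_request(topic, audience, temperature=0.7, model="gpt-3.5-turbo"):
    """Return a Chat Completions request body with preloaded context (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            # The system message preloads context and defines the output format.
            {
                "role": "system",
                "content": "You are a presentation advisor. Answer in bullet points.",
            },
            # The user message is filled in from the template.
            {
                "role": "user",
                "content": STORYLINE_TEMPLATE.substitute(topic=topic, audience=audience),
            },
        ],
        # Settings the chat UI does not expose: randomness and maximum reply length.
        "temperature": temperature,
        "max_tokens": 500,
    }


if __name__ == "__main__":
    body = build_storyline_request("data-driven sales", "the management team")
    print(json.dumps(body, indent=2))
```

Swapping the template or the system message changes the advisor’s behaviour without touching the rest of the code, which is the kind of on-the-fly tweaking described above.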

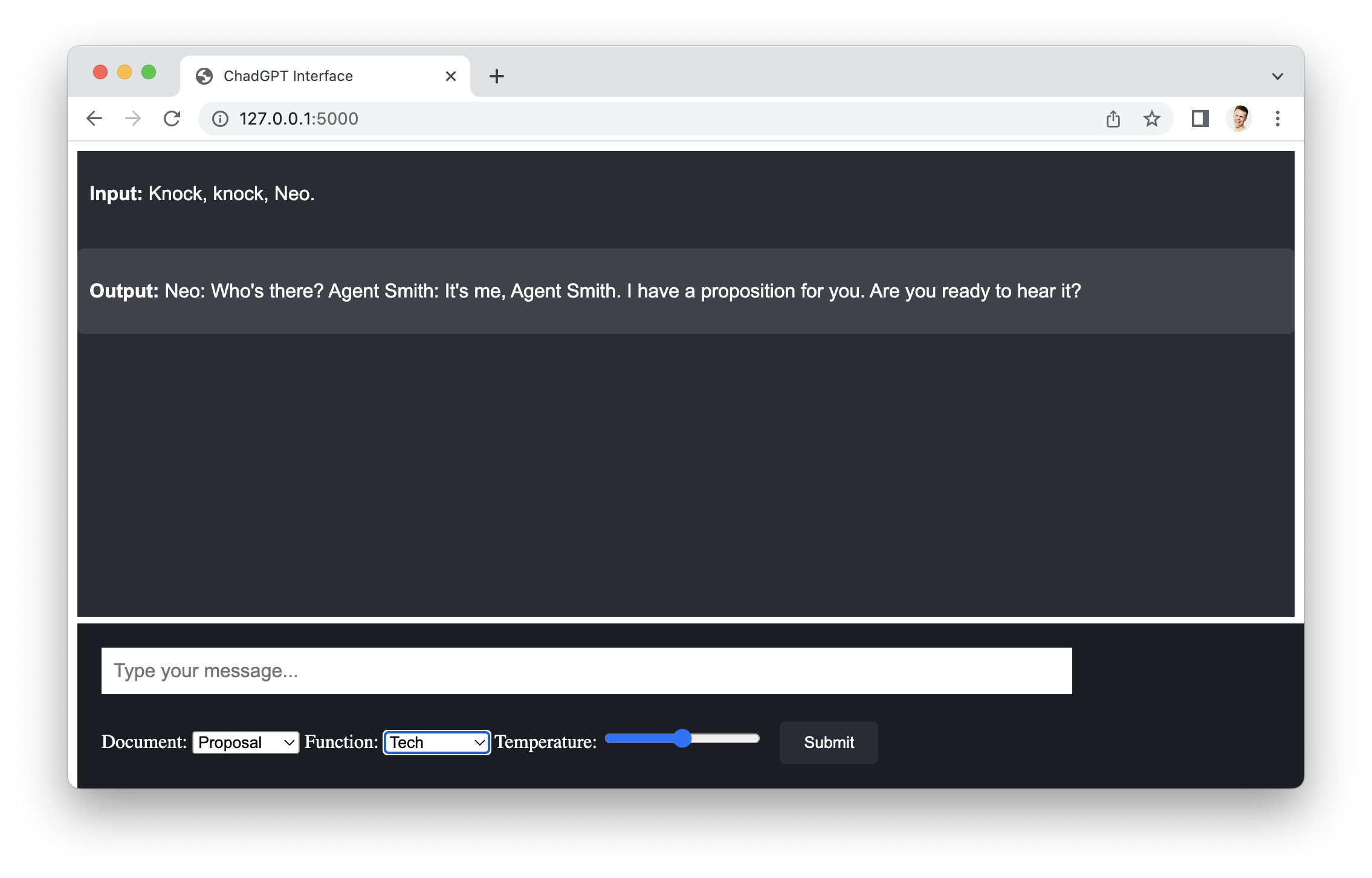

Why don’t I just describe what I want and let the language model do the work? I had already learnt that, via the API, ChatGPT could be given a lot more context and its behaviour could be adjusted further. I could take the tool to the next level and build myself an advisor that I can configure on the fly. A simple listing of the dialogue, an input box, some dropdowns and maybe a slider or two would do nicely as an interface. How hard can it be? Right.
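Behind such an interface, the dropdowns and sliders simply map onto API parameters. A minimal sketch, assuming a dropdown named `model` and a slider named `temperature` (both hypothetical field names) and an illustrative list of allowed models; the 0 to 2 clamp follows the range the Chat Completions API accepts for `temperature`.

```python
import math

# Hypothetical dropdown options; adjust to the models your account can use.
ALLOWED_MODELS = {"gpt-3.5-turbo", "gpt-4"}
DEFAULT_MODEL = "gpt-3.5-turbo"
DEFAULT_TEMPERATURE = 1.0


def settings_from_form(form):
    """Turn raw form values (strings) into validated API settings.

    `form` can be a plain dict or Flask's request.form; both support .get().
    The field names "model" and "temperature" are illustrative assumptions.
    """
    model = form.get("model", DEFAULT_MODEL)
    if model not in ALLOWED_MODELS:
        model = DEFAULT_MODEL  # fall back instead of sending an unknown model name

    try:
        temperature = float(form.get("temperature", DEFAULT_TEMPERATURE))
    except (TypeError, ValueError):
        temperature = DEFAULT_TEMPERATURE
    if not math.isfinite(temperature):
        temperature = DEFAULT_TEMPERATURE
    # Clamp the slider value to the range the API accepts (0 to 2).
    temperature = min(max(temperature, 0.0), 2.0)

    return {"model": model, "temperature": temperature}


print(settings_from_form({"model": "gpt-4", "temperature": "2.5"}))
# {'model': 'gpt-4', 'temperature': 2.0}
```

Validating on the server side keeps a mistyped or tampered form value from turning into a failed API call.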

Setting up a project was the easy part

After a brief dialogue with GPT-3.5, I made some decisions on the project setup, meaning the tools, the components, and how to organise the work. I soon learnt that I needed a lightweight framework for creating dynamic web pages. ChatGPT recommended the Flask framework for this purpose, and it looked fine. Python, for its part, seemed relatively simple yet powerful as a programming language, and Visual Studio Code supported it well.

The first problem was that, after I described what I wanted, ChatGPT generated a solution skeleton with loads of overhead. I had to ask what all the directories were for, why I needed to create so many files, and whether a virtual environment was really necessary to avoid dependency problems. I wanted my solution to be as simple as possible. The suggestions were far from it.

I learnt that these recommendations were considered best practices. While they might be completely valid, I was not writing production-grade software. I had to explain that this was a pet project for personal use and that code should be generated only against a specific requirement. After some trial and error, I was able to create a simple enough Hello World! I had three files: the Python source code, an HTML template, and a CSS file. I could run the Flask app from the command line, and I managed to keep my sanity in check. So far, so good.
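For readers who want to reproduce that starting point, here is a hedged sketch of a Flask Hello World. It assumes Flask is installed (`pip install flask`) and, to stay in one runnable file, inlines the HTML template with `render_template_string`; in the three-file layout described above, the markup would sit in `templates/index.html` (rendered with `render_template`) and the styles in `static/style.css`. File and variable names are illustrative.

```python
from flask import Flask, render_template_string

app = Flask(__name__)

# In the three-file layout this markup would live in templates/index.html
# and be rendered with render_template("index.html", greeting=...).
PAGE = """<!doctype html>
<html>
  <head>
    <link rel="stylesheet" href="{{ url_for('static', filename='style.css') }}">
  </head>
  <body>
    <h1>{{ greeting }}</h1>
  </body>
</html>"""


@app.route("/")
def index():
    # Render the page with a single template variable.
    return render_template_string(PAGE, greeting="Hello World!")
```

Saved as `app.py`, it can be started from the command line with `flask --app app run` (Flask 2.2 or newer); Flask serves `style.css` from a `static` folder next to the script.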

Things get complicated if you let them

First, I wanted to create a proper chat interface: a simple input box that stretches vertically when multiple lines are entered. At this point, it was fine to simply echo the input back as the output to get the interface working nicely. The LLM did suggest going for the API immediately, but as the old saying in project management goes: “We’ll burn that bridge when we get there.”

When using an LLM for code generation, it is a good idea to keep the requirements simple and present them one at a time. Even then, it tends to generate unnecessary clutter. For some reason, it claimed that resizing the input element required adding multiple event listeners in the script section of the HTML file. Some experimentation revealed that only one was needed. Adding more actually took the focus away from the input box after the form was submitted. Making the Enter key submit the form while Shift+Enter adds a new line needs one more event listener, not three. Even with my outdated expertise, I was able to make reasonable guesses about how the code behaves and modify it. For someone who has never touched an IDE, this would be a lot harder.

When the interface finally worked, it was time to let go of the look’n’feel from the 90s. I asked the LLM to generate a futuristic style for my solution. CSS is used to define the styles of web pages, and my minimalist CSS file contained definitions for three things. ChatGPT generated dozens of additional definitions. It seemed to think that every heading level needed its own definition. Of the six, I used only one, and it happened to be commented out. Nevertheless, I had to see what it looked like. Despite a small bug where the input and output overlapped, I got what I asked for. It sure was futuristic! The designers of the future just happened to have their aesthetics dialled in to an 80s movie. On top of it all, every piece of text was in a different size and font. I think I asked it to “go nuts”, but this was a bit too nuts even for my taste. After some iteration, I ended up with a small set of definitions. It looked nice enough and worked the way I intended, and I was even more impressed with the result.

Here’s what my ChadGPT ended up looking like!

Calling the API was simple, if you know how to do it

There was no putting off the inevitable. Playtime was over, and it was time to try interfacing with the REST API OpenAI provides. I asked ChatGPT to generate the necessary code, and it gladly obeyed. At this point, I should also say that Visual Studio Code is neat. Like, really neat. It actually helps a lot with what I am trying to do. It supports copy-pasting code around, and even writing some, in many ways. It points out obvious errors before I even make them and helps me correct them on the fly. Back in the day, Eclipse was heavy and far from the lightning-fast elegance VS Code provides.

Unfortunately, the instructions I got were outdated. The pages for generating API keys they referred to no longer existed, and the library I imported had evolved. Reading the API documentation alone was a bit too much (I’d like to see the examples in one language at a time, thank you), but a couple of helpful blogs took me forward. Even though its training cutoff predated the API updates, ChatGPT was quite helpful in debugging. You just give it the code you are working with and the error, and it tells you what to do. And I must say, the IDE helps you resolve a lot of problems before you get that far. Interestingly, ChatGPT sometimes generates buggy code. When asked to review it, it might say that an import seems to be missing. Well, thanks for helping me fix the thing you created.

ChatGPT also learnt that I was not that interested in explanations of the generated code. The value proposition for me is that I can skip the nuts and bolts and focus on the business logic. It also learnt that I am not interested in individual lines of code or examples, but in clear instructions for replacing entire code blocks. I must admit that the JavaScript parts of the app became a bit of voodoo at some point. Once I got far enough, I just updated the code blocks without reading them and experimented with the functionality. I did refactor at the end, though. I also learnt that it is a good idea to give ChatGPT an update every now and then. That means pasting all the source code into the chat and telling it that this is the current code base. My process would probably make many real programmers roll their eyes. I can also imagine that a lot of software is created with a mindset not too far from mine. My goal is to create a working solution with as little overhead as possible.
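The “give ChatGPT an update” step is easy to automate. A minimal sketch with a hypothetical helper, `bundle_sources`, and an assumed list of file extensions: it walks the project folder and concatenates every source file into one block of text, each file under a header line with its path, ready to paste into the chat.

```python
from pathlib import Path

# File types to include; an assumption matching a Python/HTML/CSS/JavaScript project.
SOURCE_SUFFIXES = {".py", ".html", ".css", ".js"}


def bundle_sources(root="."):
    """Concatenate the project's source files into one paste-ready string.

    Hypothetical helper: each file is preceded by a header line with its path,
    and the whole text starts with a note telling ChatGPT what it is.
    """
    base = Path(root)
    parts = ["This is the current code base:"]
    for path in sorted(base.rglob("*")):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            parts.append(f"--- {path.relative_to(base).as_posix()} ---")
            parts.append(path.read_text(encoding="utf-8"))
    return "\n\n".join(parts)
```

Running `print(bundle_sources())` in the project folder produces the text to paste. If a virtual environment or other large folders live inside the project, they would need to be excluded first.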

Finally, after much trial and error, it worked! I got back a JSON object listing all available models and printed it to the console. I started to understand why a programmer friend of mine once said that Hello World is the most difficult part. After that, it is just a matter of building layers on top of it. I managed to get there, and the rest was just rearranging things. This is the fingertip feeling I mentioned earlier. Getting the interface to reply in a chat was actually easy.
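That first successful call, listing the available models, can be sketched with the Python standard library alone. This assumes the key is passed in by the caller (for example read from an environment variable such as `OPENAI_API_KEY`, a common convention rather than something the post specifies) and uses OpenAI’s `GET https://api.openai.com/v1/models` endpoint with a bearer token; the helper names are hypothetical.

```python
import json
import urllib.request

# Endpoint for listing models in OpenAI's REST API.
MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key):
    """Prepare an authenticated GET request for the model list (hypothetical helper)."""
    return urllib.request.Request(
        MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def list_models(api_key):
    """Call the API and return the model IDs from the JSON response."""
    with urllib.request.urlopen(build_models_request(api_key), timeout=30) as resp:
        payload = json.load(resp)
    # The response looks like {"object": "list", "data": [{"id": "..."}, ...]}.
    return [model["id"] for model in payload["data"]]
```

With the key in the environment, `print(list_models(os.environ["OPENAI_API_KEY"]))` prints the model IDs; printing `payload` instead shows the whole JSON object. Keeping the key out of the source file also makes it safe to paste the code into a chat.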

The Python code needed is 30 lines, including comments and blank lines in between. It’s pretty neat. The HTML is double that, and the CSS is somewhere in between. It’s not much, but I wanted to keep it tight. It’s obvious that the LLM is not yet quite ready to take a set of user stories and generate a complete solution from them. Far from it, and at the same time, not that far. If you want to see how good it is, paste this blog post into the LLM and ask it to generate the code based on it. Try it; you might be surprised.
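The post does not reproduce its 30 lines of Python, so the following is only a hedged, standard-library sketch of what the core of such a backend could look like: one POST to OpenAI’s Chat Completions endpoint carrying the conversation so far, with the reply read from `choices[0].message.content`, where that API returns it. The function names and the default model and temperature are assumptions.

```python
import json
import urllib.request

CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, messages, model="gpt-3.5-turbo", temperature=0.7):
    """Prepare a POST request carrying the whole conversation (hypothetical helper)."""
    body = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(payload):
    """Pull the assistant's text out of a decoded API response."""
    return payload["choices"][0]["message"]["content"]


def chat(api_key, messages, **settings):
    """Send the conversation and return the model's reply text."""
    request = build_chat_request(api_key, messages, **settings)
    with urllib.request.urlopen(request, timeout=60) as resp:
        return extract_reply(json.load(resp))
```

Because the API itself is stateless, the whole `messages` list is sent on every turn; the app appends the user’s input and the returned reply to that list between calls.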

The software business is going to take a huge leap forwards

No question about it. My background helped a lot. Creating working software is easier than ever, but not for just anyone. My experience in tech sales, writing requirements, creating specifications and leading software projects, plus a moderate understanding of enterprise architecture, makes it a completely different game for me. I see a need for defining loosely coupled, modular, compartmentalised components that do not affect other components when changed. Once a skeleton and interfaces are available for these modules, it will be ridiculously easy to generate the business logic inside them. At the end of the day, the power of LLMs comes from their ability to turn natural language into code. The process might be messy and require a lot of iteration for now. Think of what it will look like in a couple of years.
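The idea of loosely coupled modules behind defined interfaces can be illustrated in Python with `typing.Protocol`. A minimal sketch with hypothetical names (`ReplyBackend`, `EchoBackend`, `FunctionBackend`, `ChatService`): the chat service depends only on the interface, so the echo stub used while building the chat interface can later be swapped for an API-backed function without touching the rest of the code.

```python
from typing import Callable, Protocol

# One chat message in the role/content shape used by the chat API.
Message = dict[str, str]


class ReplyBackend(Protocol):
    """The only thing the chat service knows about its backend."""

    def reply(self, messages: list[Message]) -> str: ...


class EchoBackend:
    """Stub backend: repeats the latest message back."""

    def reply(self, messages: list[Message]) -> str:
        return messages[-1]["content"]


class FunctionBackend:
    """Adapter for any callable, e.g. a hypothetical function that calls the real API."""

    def __init__(self, send: Callable[[list[Message]], str]) -> None:
        self._send = send

    def reply(self, messages: list[Message]) -> str:
        return self._send(messages)


class ChatService:
    """Business logic that depends on the interface, not on a concrete backend."""

    def __init__(self, backend: ReplyBackend) -> None:
        self.backend = backend
        self.history: list[Message] = []

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        answer = self.backend.reply(self.history)
        self.history.append({"role": "assistant", "content": answer})
        return answer


service = ChatService(EchoBackend())
print(service.ask("Hello"))  # Hello
service.backend = FunctionBackend(lambda msgs: f"{len(msgs)} message(s) so far")
print(service.ask("And now?"))  # 3 message(s) so far
```

Each module here is small enough to be regenerated by an LLM on its own, as long as it keeps the same interface.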

It is a revolution we are witnessing. It’s happening right now.
